Thursday 5PM (ET): The one and only Stephen Wolfram goes deep on AI

Friday 2PM (ET): Disco CEO Evin McMullen explains how to prove you're human in an AI world.

Inside Today's Meteor

- Disrupt: New Releases from Adobe, NVIDIA and more

- Create: New Work from AI Artists Wayfinder and Viola Rama

- Compress: Steve Jobs Gets His ChatGPT Debut

Disrupt: Six AI Releases in 48 Hours

It's only Wednesday, and we have major AI releases from Adobe, RunwayML, NVIDIA, Google, Microsoft and the open source Alpaca project. In this morning's Meteor, we're going to break down the big launches and show you some examples of what these creative power tools can do.

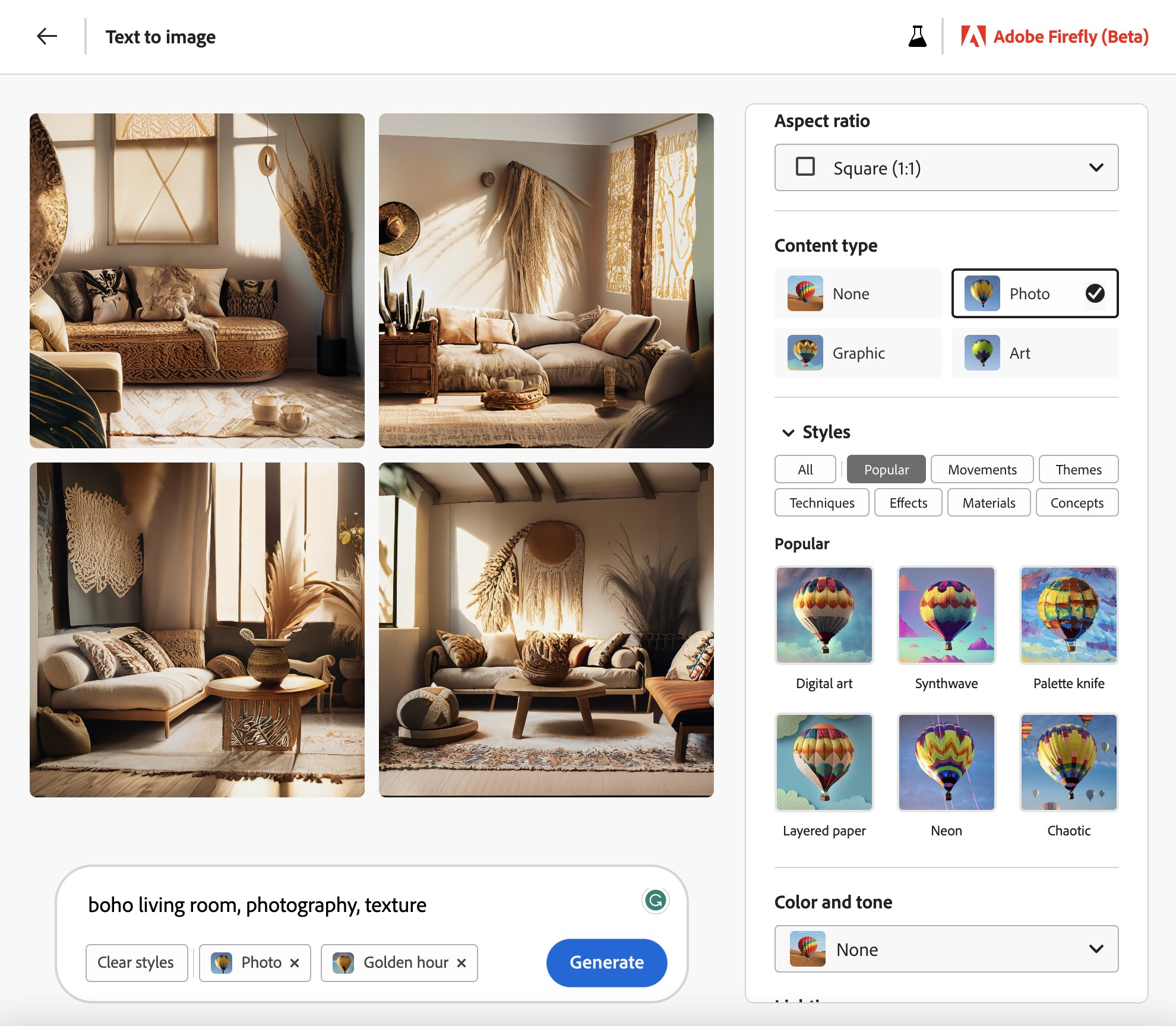

Adobe Firefly

First up is Adobe. To date, the Photoshop creator has let independent developers release plugins that bring some of the AI image capabilities of DALL-E 2 and Stable Diffusion to the world's most popular creative tool.

That ends now. Adobe has publicly announced the limited beta of Firefly. The platform appears to be a greatest-hits collection of AI imaging tools with a few new twists thrown in. Adobe hopes to differentiate on two fronts. One, they are bringing polished UX to a field that today relies on crowded Discord servers and hard-to-install open source libraries.

Perhaps more importantly, Adobe claims they have trained their models completely on licensed images, either from their stock collections or from Creative Commons images on the open net. They say there will be a compensation scheme, but haven't revealed how it works just yet. It's a shot across the bow to Stability.ai, OpenAI and Midjourney, which have all come under scrutiny (and lawsuit) for using billions of images scraped from the Internet without asking permission or providing compensation to their owners.

Some early testers have said Firefly's engine isn't familiar with as many concepts as the others. YouTuber BillyFX said it could not reproduce muscle cars, for example. But we've also seen some pretty stunning images coming out, which suggests it's possible to train diffusion image models in a fully ethical way. It will be interesting to see if others follow suit.

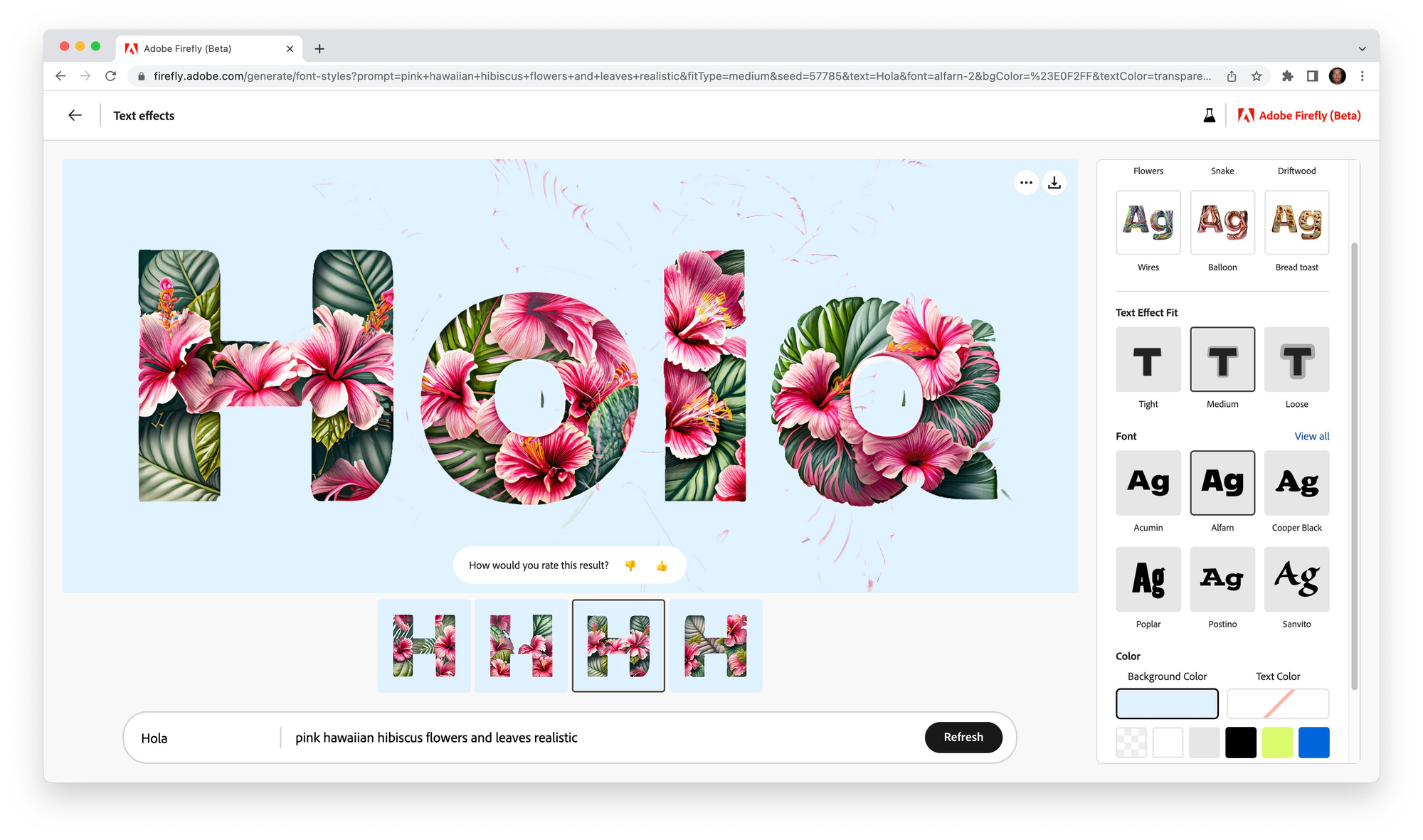

Adobe also has a few other new tricks, including using AI to craft highly illustrative typography, something the other engines struggle mightily with. Firefly can't yet create its own typography, but reportedly that's in the works.

RunwayML

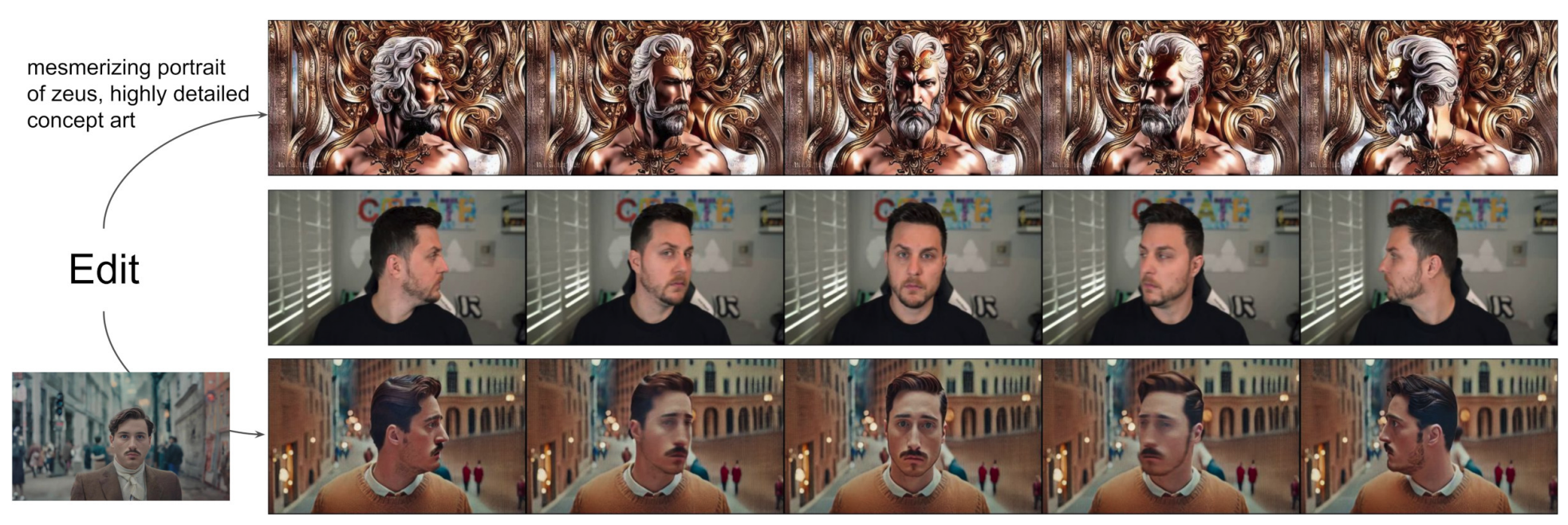

Next up is RunwayML. You might recall us discussing the beta release of Gen-1, their breakthrough text-to-video product, just a few weeks ago. Now, even before Gen-1 is publicly released, the company is touting Gen-2. It's hard to tell whether this is actually a new product or just an improvement to Gen-1 after beta users have been banging against it for a few weeks.

For those needing a quick refresher, text-to-video is the ability to type in any prompt, like we do with stills in Midjourney or conversations with DALL-E 2, and out pops a fully rendered video of whatever your string of words has conjured up.

The basic premise of Gen-1 is that you feed it a control video, which usually dictates motion and composition, and a target image from which it draws aesthetic guidance, and the engine creates a reasonable blend of the two.

Gen-2 seems to take this to the next level, allowing you to skip the control video step and just type in whatever you'd like it to make.

To be clear, this isn't movie-theater-quality footage. But if the rapid advancement of image engines is any guide, that reality is months, not years, away.

NVIDIA

Chip maker NVIDIA also stepped into the fray this week, announcing the AI Foundations cloud service, which lets companies with large data sets train their own AI language and image models. Getty and Shutterstock have signed up to build their own image models based on their stock libraries, similar to what Adobe just launched. Adobe has also signed on to NVIDIA's service, but it's not clear if they are using it for their current launch or for future features across Photoshop and Premiere.

More interestingly, NVIDIA announced the expansion of BioNeMo, an AI engine trained on molecular biology, chemistry and protein engineering. Pharma shops can bring their private data sets to the party to turbocharge drug discovery. Amgen, Evozyne and Insilico Medicine are already on board, according to Drug Discovery & Development.

If it works, it could dwarf the achievements of the current generation of LLMs and diffusion image models, which have pumped out smart computer code, cool graphics and mediocre screenplays but definitely aren't curing cancer.

Google and Microsoft

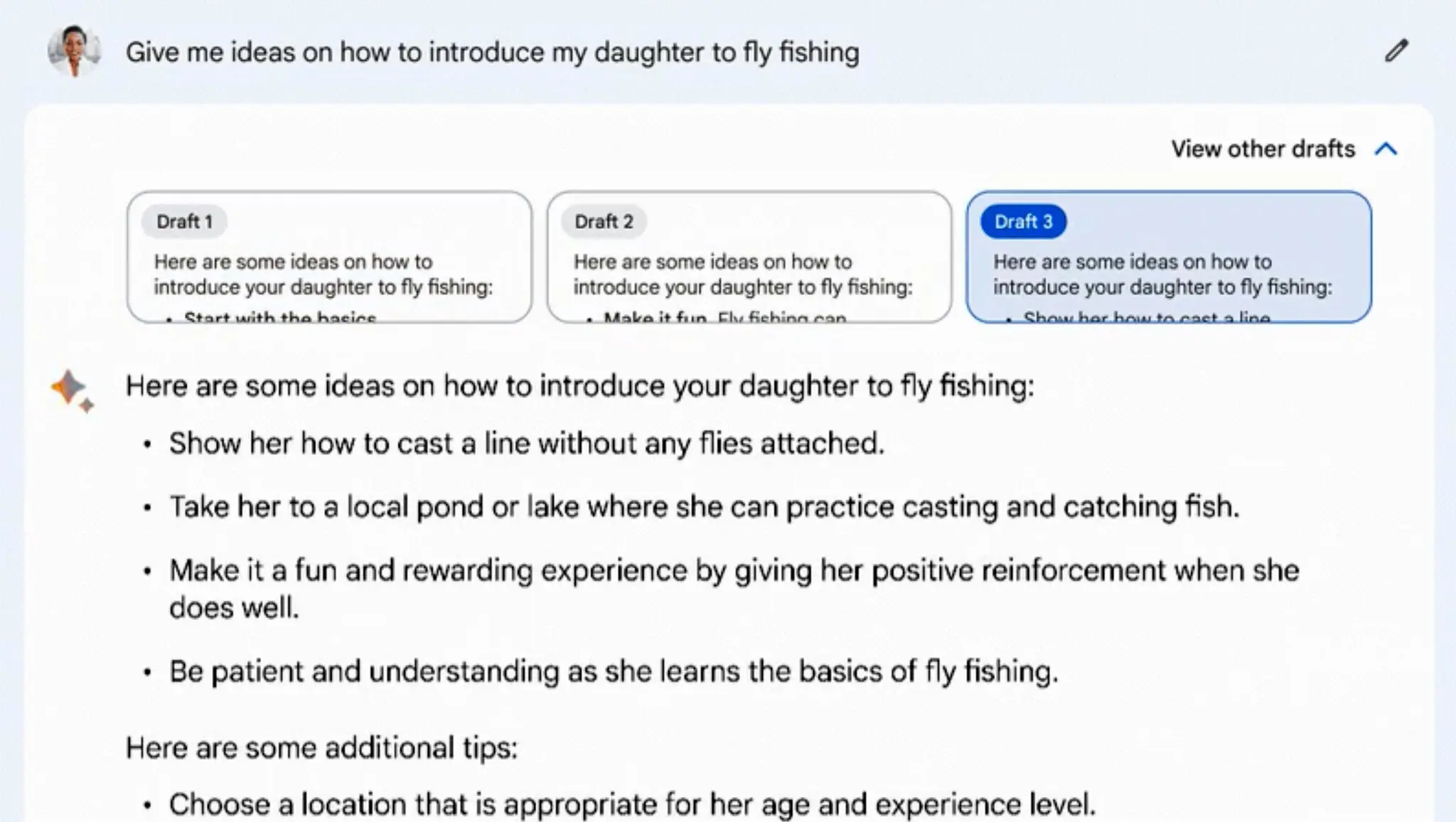

The two giants battling for AI supremacy seem to issue dueling press releases each week. Yesterday, Google announced they were opening up beta access to Bard, their AI-powered answer to Bing's GPT chatbot. The announcement came with the usual disclaimers: it will make mistakes; it's still learning; don't trust it for anything useful.

Meanwhile, Microsoft announced that AI image creation is now possible from within Bing search, which seems to be morphing into less of a search engine and more of a portal for many AI services. Users can access it only via the Edge browser, similar to Bing GPT.

Alpaca

And finally, we wrote extensively yesterday on Alpaca, the open source project from Stanford researchers that coaxes ChatGPT into training Meta's large language model, with the reward being a high performance chatbot that can run on a regular PC or smartphone. That model is called Alpaca 7B because it has seven billion parameters.

What a difference a day makes.

Today we hear word of Alpaca 30B, which has roughly 4X the parameters of yesterday's model. It's not quite as lean. No iPhone here. We're talking $20K of hardware, but that's nothing compared to the tens or hundreds of millions of dollars OpenAI and Stability.ai spent on compute to train their models. Stay tuned to see what Alpaca 30B can do.

Create

New art from @_wayfinder which he describes as "a reinterpretation of Goya's series of prints protesting the violence and brutality of war." Available now on Objkt.com.

By Viola Rama.

Compress

ChatGPT Gets the Steve Jobs Treatment

ChatGPT + Steve Jobs voice + FB Messenger = creepy but amazing tech demo. Its creator, tech entrepreneur John Meyer, was inspired by the sudden loss of his father a few years ago and his desire to reconnect with some part of him.

"There was a lot left un-said, and a lot I wish I could've spoken to him about in my adult life — like, how to raise kids, or build a successful company. And so far, I can say it's a really warm, loving, and enjoyable experience to be able to talk to him again...not to mention surreal."

GPT Better Have My Money

White hat hackers won $123K using ChatGPT to write code that broke into industrial systems. The Pwn2Own contest brings ethical hackers together to improve security and show off their collective skills.