Join us for our first Twitter Space today at 11 a.m. PST: "Art of the Steal? Deep Inside the NFT Trials That Could Change It All," with NYU Law professor Michael Kasdan (@michaelkasdan).

“There are open questions about the impact of our work on ongoing lawsuits against StabilityAI, OpenAI, GitHub, etc. Specifically, models that memorize some of their training points may be viewed differently under statutes like GDPR, US trademark + copyright, and more.”

- Eric Wallace, PhD student at Berkeley AI Research and co-author of a new study finding that models like DALL-E 2 produce some images nearly identical to those they were trained on, which may be private or copyrighted.

Inside Meteor Today

- Create: #BlackFutureMonth artist Elise Swopes is tired of fixing "bullshit problems"

- Think: AI pumps the brakes

- Compress: Jack Dorsey backs a Twitter competitor

- Disrupt: Is the Dodo bird coming back?

Create

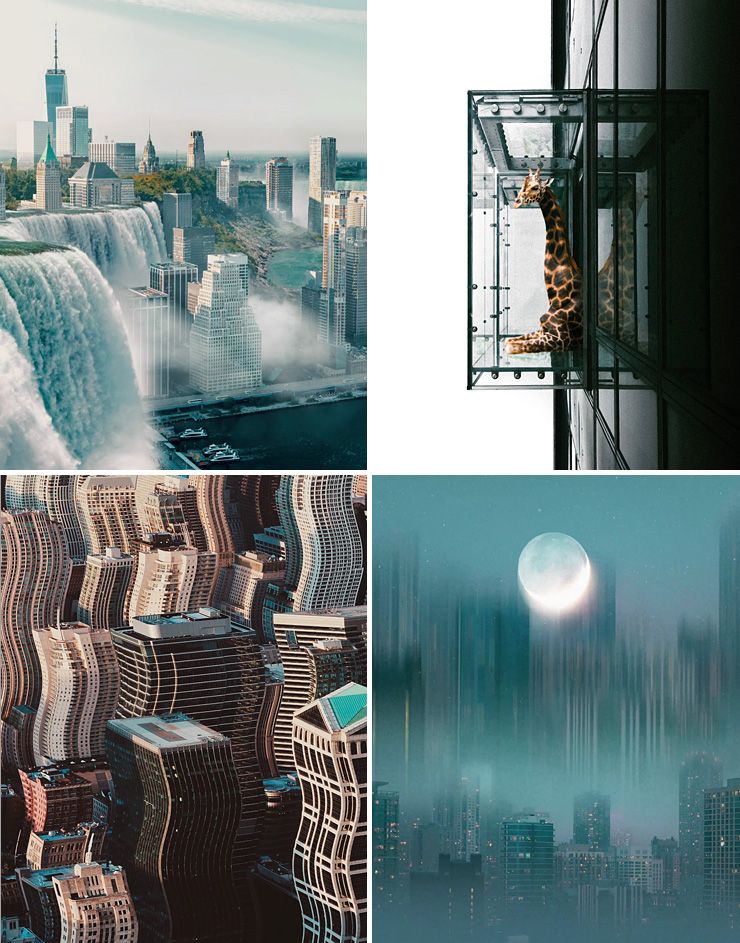

Black Future Month: Artist Spotlight, Elise Swopes

Elise Swopes is a digital artist and Instagram influencer with a mission: to solve “bullshit problems,” like building support for NFT artists of color in a space dominated by stereotypically white, male crypto bros.

Part of her solution was founding the Sunrise Art Club, a creative impact art agency supporting underserved artists who she says are typically black creators. The club offers live workshopping and other events for artists; one of its projects is titled NOTY, for Night on the Yard, featuring curated work from incarcerated artists.

“(It’s) creating an economy that we obviously have to do ourselves,” Swopes says.

The 33-year-old’s own work often features cityscapes captured while “piloting drones, scaling skyscrapers, or hanging out of helicopters.”

Swopes is enthusiastic about the many new opportunities the NFT world presents for artists of color.

“We’re able to talk more with each other, to build with each other, to connect… And I think that, if anything, is the real decentralization, where we can finally speak openly and access our own shit and monetize our own shit.”

Find all of Swopes' work here.

– Rebekah Sager

World building with Opus text-to-video AI

We're buildling a text to video games tool with the vision to reignite renaissance of design systems 👇pic.twitter.com/85XhuNK77t https://t.co/EKdQtz8fTF

— OPUS - Text-to-Video-Games (@OpusAIInc) January 12, 2023

Have artists and art projects we should feature? Send them to evan@thisismeteor.com

Think

AI Takes a Moment to Ponder its Collateral Damage

It's rare for tech to admit it is going too fast, but many of the new new things in AI are collectively pumping the brakes.

On Jan. 23, startup ElevenLabs blew up the Internet with a free-to-use beta voice-cloning app that could put words into other people's mouths. By Jan. 31, the app was behind a paywall after some people decided to put bad words into celebrities' mouths.

ElevenLabs then posted a lengthy Twitter thread about the abuse and what it planned to do in response (a lot, though it won't work perfectly).

After moving fast and breaking things for years, AI companies are pausing to pick up some pieces. Some, like ElevenLabs, are even pondering whether it might be possible to stuff the genie back in the bottle.

Really impressed by the acting you can generate with @elevenlabsio #texttospeech! These are #AIvoices generated from text—dozens of "takes" stitched together. Breakdown thread: 1/8 #syntheticvoices #ainarration #autonarrated #aicinema #aiartcommunity #aiia #ai #MachineLearning pic.twitter.com/Xm8WWyJnur

— CoffeeVectors (@CoffeeVectors) February 1, 2023

Consider Stability AI. It scraped 5 billion images from the public Internet for its magical text-to-image app, Stable Diffusion, and the result was a flurry of copyright infringement suits. Now the company seems to have taken a step back. Its most recent 2.0 release has been panned by AI artists, who prefer the older 1.5 version. Word is the updated app uses a new dataset that attempted to exclude copyrighted works, which wound up making it worse.

In a recent interview, OpenAI co-founder and CEO Sam Altman tried to counter the perception of ChatGPT as just another example of the "move fast, break things" ethos famously espoused by Facebook founder Mark Zuckerberg. While the release had that effect, that was not the intent, he told StrictlyVC in January.

"One of the things we really believe is that the most responsible way to put this out in society is very gradually...rather than drop a super powerful AGI [Artificial General Intelligence] in the world all at once," he said. "And so we put GPT3 out almost three years ago, and then we put it into an API [two and half years ago]... And the incremental update from that to ChatGPT I felt like should have been predictable, and I want to do more introspection on why I was sort of mis-calibrated on that."

It seems obvious in retrospect that the miscalculation was around public reception, media cycles and going hyper viral – the parts of culture that engineers don't tend to have a great handle on.

Now, OpenAI is trying to do some damage control.

When ChatGPT freaked out teachers and writers everywhere last year, a college student produced a detector for AI-generated text in short order. This week, OpenAI released its own free-to-use classifier for distinguishing between human and AI-drafted text. The release comes with a big, bolded caveat: “Our classifier is not fully reliable.”

In early evaluations, the tool correctly flagged just 26 percent of AI-written text as “likely AI-written,” while incorrectly labeling human-written text as AI-generated nine percent of the time. Further, the classifier is “very unreliable on short texts (below 1,000 characters).”

OpenAI says it is making the tool publicly available to gather feedback. For what it’s worth, it classified this piece as “very unlikely” AI-generated.

Meanwhile, when Google announced a remarkable new text-to-music AI model last week, it declined to make it available to the general public, citing copyright concerns. The decision drew groans of disappointment.

In this rare moment of reflection, it’s worth considering what other indirect impacts of this technology will soon surface. Forget cheating on homework; we need to worry about the potential for whole new forms of cyber warfare.

Research has found that a minuscule amount of malicious data can “poison” a training set in an attack on the entire AI system. AI also provides a powerful new tool for bad actors to conduct phishing attacks and automated hacking campaigns.

We’ve seen this all before. The harms of social media were obvious long before Zuck was summoned to Capitol Hill.

– Eric Mack and Evan Hansen

We are accepting story pitches. Hit us up at evan@thisismeteor.com.

Compress

ChatGPT Says Pay Me

OpenAI opens a waitlist for the pro version of ChatGPT, starting at $20 a month.

Jack Dorsey Swings at Twitter Boyfriend Elon

Damus, a new decentralized social media network backed by Dorsey, debuts.

Bitcoin Does NFTs Too Now

Some people are upset.

Intelligent Agents Might Actually Be Intelligent

Predictika promises more conversational virtual assistants to guide you through the uncanny valley of customer service.

MetaKey Launches NFT to "Unlock" Multiple Metaverses

Never get locked out of your virtual house again.

Good Breakdown of the Many Flavors of AI for Your Perplexed Relatives

You'll thank us at family dinners.

Does the Future of AI Belong to Big Tech?

The New Yorker looks at open-source speech-recognition AI Whisper.cpp and comes to a surprising conclusion.

Disruptions

Return of the Dodo Bird

The company working to resurrect the woolly mammoth is also going to work on bringing back the dodo bird.

Lift Off for Musk

Elon could fire up all 33 engines on his biggest toy very soon.

Thanks for reading! Please take just 30 seconds to tell us what you love or hate about this issue.